SEO Audit Checklist in 7 Simple Steps

If you are planning to optimise a website, an SEO technical audit is the all-important first step.

It checks whether a site follows industry best practices, giving the webmaster a solid foundation on which to build a successful SEO campaign.

This SEO audit checklist is designed to take you through an audit from beginning to end, ensuring no stone is left unturned. For a full strategy outline, please contact us and take the next step with your digital marketing agency in Thailand.


1. Check Robots.txt and XML sitemap

To ensure your website can be seen in the search engine results pages (SERPs), it is important that these two files (i.e. Robots.txt and the XML sitemap) are present on the website.

Robots.txt is a basic text file that is placed in your site’s root directory; it can also reference the XML sitemap location.

It tells search engine bots which parts of your site they may crawl and which they should stay out of. It can also set different rules for different bots, allowing some to crawl your site and blocking others.

The XML sitemap is a file that contains a list of all the pages on your website. It can also hold extra information about each URL in the form of metadata, such as the date the page was last modified.

Alongside Robots.txt, the XML sitemap helps search engine bots find, crawl and index all the pages on your website.

How do you check/create a Robots.txt file with the sitemap location?

You can check Robots.txt and XML sitemap files by following these three steps:

1) Locate your sitemap URL

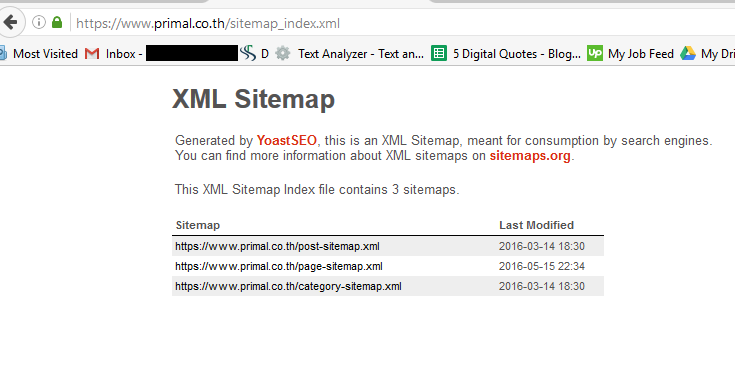

The XML sitemap can usually be found by adding /sitemap.xml to the root domain. For example, type www.example.com/sitemap.xml into your web browser.

As you can see in the above screenshot, primal.co.th has multiple sitemaps.

This is an advanced tactic that can help to increase indexation and site traffic in some cases.

The experts over at Moz.com have written in detail about the value of multiple sitemaps.

If you don’t find a sitemap on your website, you will need to create one. You can use an XML sitemap generator or utilise the information available at Sitemaps.org.
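If you would rather inspect a sitemap programmatically, the minimal Python sketch below (standard library only) lists the URLs in a sitemap you have already downloaded. It assumes the file follows the sitemaps.org schema, where a regular sitemap has a urlset root and a site with multiple sitemaps uses a sitemapindex root that points to the child sitemaps. The function name and example URLs are hypothetical.

```python
import xml.etree.ElementTree as ET

# Namespace used by the sitemaps.org protocol.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_locations(xml_text):
    """Return the root type ("urlset" or "sitemapindex") and every <loc> value."""
    root = ET.fromstring(xml_text)
    # ElementTree reports namespaced tags as "{namespace}localname".
    kind = root.tag.split("}")[-1]
    locs = [el.text.strip() for el in root.findall(".//sm:loc", SITEMAP_NS) if el.text]
    return kind, locs


# Hypothetical sitemap index pointing at two child sitemaps.
example_index = """<sitemapindex xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <sitemap><loc>https://www.example.com/page-sitemap.xml</loc></sitemap>
  <sitemap><loc>https://www.example.com/post-sitemap.xml</loc></sitemap>
</sitemapindex>"""

kind, locs = sitemap_locations(example_index)
print(kind)  # sitemapindex
for loc in locs:
    print(loc)
```

For a sitemap index, you would repeat the check on each child sitemap it lists.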

2) Locate your Robots.txt file

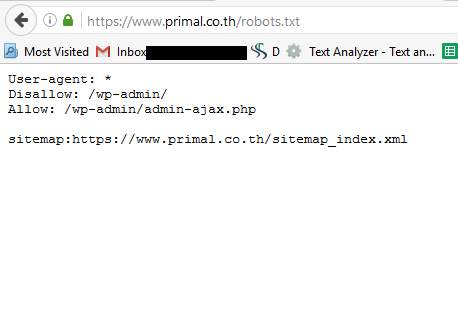

The Robots.txt file can be checked the same way, by adding /robots.txt to the root domain. For example, type www.example.com/robots.txt into your browser.

If a Robots.txt file exists, check to see if the syntax is correct. If it doesn’t exist, then you will need to create a file and add it to the root directory of your web server (you will need access to your web server).

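Search engines only look for it at the root of the domain (www.example.com/robots.txt). On the server, that usually means the same folder as the site’s main “index.html” file, although the exact location varies depending on the type of server used.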

3) Add the sitemap location to Robots.txt (if it’s not there already)

Open Robots.txt and add a Sitemap directive containing the full URL of your XML sitemap, so that search engines can discover it automatically.

For example:

Sitemap: https://www.example.com/sitemap.xml

A complete, minimal Robots.txt file that allows all bots to crawl the whole site would then be:

Sitemap: https://www.example.com/sitemap.xml

User-agent: *

Disallow:

The screenshot from primal.co.th above shows what www.example.com/robots.txt should look like once the sitemap has been added for auto-discovery.
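To confirm that a Robots.txt file parses the way you expect, Python’s standard urllib.robotparser module can read its contents. The sketch below feeds it the example file from this step (the URLs are placeholders) and checks that the Sitemap directive is picked up and that crawling is allowed. site_maps() requires Python 3.8 or later.

```python
from urllib.robotparser import RobotFileParser

# The example Robots.txt from this step; replace with your own file's contents.
robots_txt = """Sitemap: https://www.example.com/sitemap.xml

User-agent: *
Disallow:
"""

parser = RobotFileParser()
parser.parse(robots_txt.splitlines())

# site_maps() returns the listed Sitemap URLs, or None if there are none.
sitemaps = parser.site_maps()
print(sitemaps)  # ['https://www.example.com/sitemap.xml']

# An empty Disallow line means every bot may crawl every path.
allowed = parser.can_fetch("Googlebot", "https://www.example.com/any-page")
print(allowed)  # True
```

To test a live site instead, call parser.set_url("https://www.example.com/robots.txt") followed by parser.read(). Python’s parser is a simplified implementation, so it may not match Google’s handling of every edge case.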

2. Check protocol and duplicate versions

The second stage of completing a technical SEO audit is to check the versions of the homepage listed below (along with their http:// equivalents) to see whether they are all accessible or all redirect to one preferred version of the website.

- https://example.com

- https://example.com/index.php

- https://www.example.com

- https://www.example.com/index.php

It’s important to note that Google prefers sites that use HTTPS rather than HTTP. HTTPS (Hypertext Transfer Protocol Secure) is essentially the secure, encrypted version of HTTP (Hypertext Transfer Protocol).

It is particularly important for e-commerce websites to use HTTPS, as their shopping carts and payment systems handle sensitive customer data.

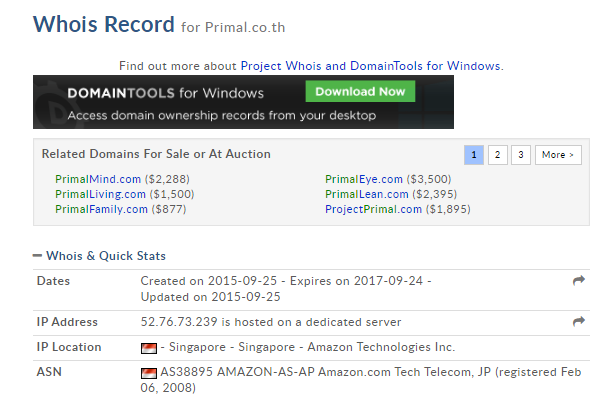
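This check can be scripted. The minimal Python sketch below builds the homepage variants listed above, plus their http:// equivalents, for a hypothetical domain and records where each one ends up after redirects. Ideally, every variant resolves to the same preferred URL. The helper names are illustrative, and the network calls assume the site is reachable from wherever you run the script.

```python
import urllib.error
import urllib.request


def homepage_variants(domain):
    """Protocol, www and /index.php variants of a bare domain such as "example.com"."""
    variants = []
    for scheme in ("http", "https"):
        for host in (domain, "www." + domain):
            for path in ("", "/index.php"):
                variants.append(f"{scheme}://{host}{path}")
    return variants


def final_destination(url, timeout=10.0):
    """Follow redirects (urllib does this by default) and report where the request ends."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return response.geturl()
    except urllib.error.HTTPError as err:
        # 4xx/5xx responses raise HTTPError; report the status code instead of a URL.
        return f"HTTP {err.code}"
    except urllib.error.URLError as err:
        return f"unreachable ({err.reason})"


def audit_variants(domain):
    """Map each variant to its final destination."""
    return {url: final_destination(url) for url in homepage_variants(domain)}
```

For example, audit_variants("example.com") returns a dictionary of eight entries; if len(set(result.values())) is 1, every version is consolidated onto a single URL.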

3. Check the domain age

It is important to check the domain age of a website, as this can affect SERP rankings.

Use whois.domaintools.com to:

- See if the site is old or new

- Gauge the likely size of its backlink profile (generally, the older the domain, the bigger the backlink profile is going to be)
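If you already have a WHOIS record in text form, the domain age is simple arithmetic. This minimal Python sketch assumes the record contains a "Creation Date:" line beginning with a YYYY-MM-DD date, as .com records typically do; other registries label and format this field differently, so treat the pattern as an assumption to adjust. The sample record is made up.

```python
import re
from datetime import datetime, timezone

# Assumed format: "Creation Date: 2012-01-01T00:00:00Z" (varies by registry).
CREATION_PATTERN = re.compile(r"Creation Date:\s*(\d{4}-\d{2}-\d{2})", re.IGNORECASE)


def creation_date(whois_text):
    """Return the registration date as a UTC datetime, or None if not found."""
    match = CREATION_PATTERN.search(whois_text)
    if match is None:
        return None
    return datetime.strptime(match.group(1), "%Y-%m-%d").replace(tzinfo=timezone.utc)


def domain_age_years(whois_text, today=None):
    """Approximate domain age in years, using 365.25 days per year."""
    created = creation_date(whois_text)
    if created is None:
        return None
    today = today or datetime.now(timezone.utc)
    return (today - created).days / 365.25


# Made-up excerpt of a WHOIS record.
sample = """Domain Name: EXAMPLE-SHOP.COM
Creation Date: 2012-01-01T00:00:00Z
Registrar: Example Registrar, Inc."""

age = domain_age_years(sample, today=datetime(2022, 1, 1, tzinfo=timezone.utc))
print(f"{age:.1f} years")  # 10.0 years
```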

4. Check the page speed

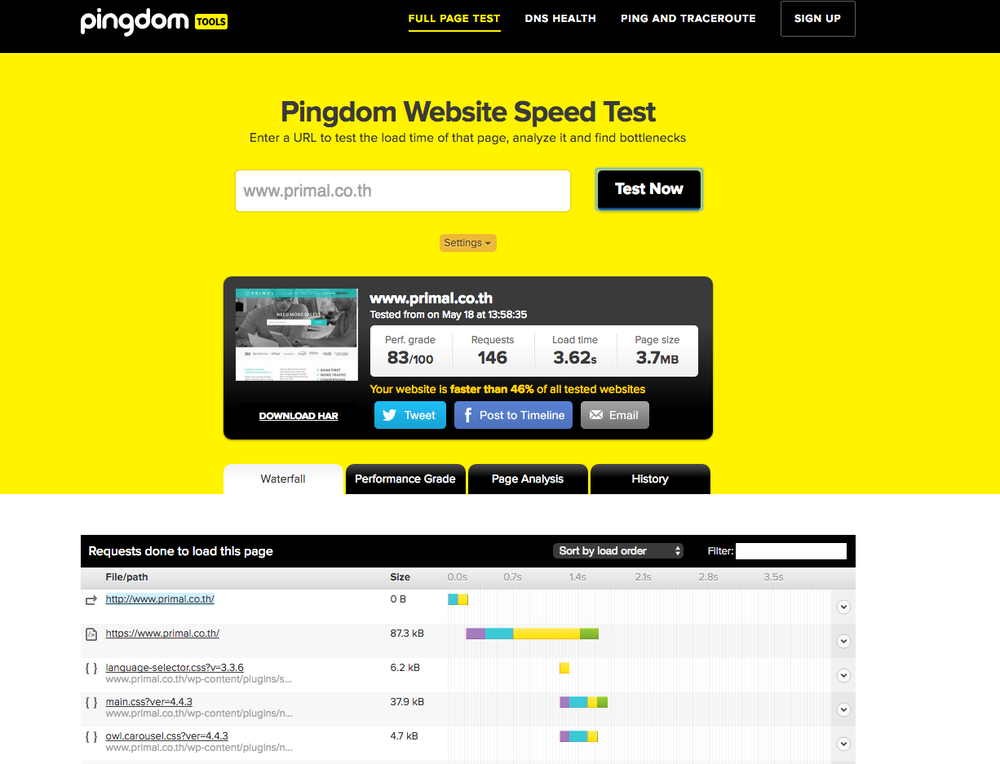

As outlined by the experts at Moz.com, site speed is one of Google’s ranking factors. It’s therefore important that a site loads as quickly as possible.

The load speed of a website can be quickly checked at tools.pingdom.com. This tool provides information regarding load time and how your site performs in comparison to other websites.

To improve page speed, webmasters should look at compressing images, adopting a content delivery network and decreasing the server response time.

Heavy custom coding and large image sizes can also slow down load times.

Remember to check the site speed of both the mobile and desktop versions of your website.

To learn about improving page speed, visit Google’s PageSpeed Insights.
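Page speed checks can also be automated through the PageSpeed Insights API (version 5), which accepts a strategy parameter of mobile or desktop. The Python sketch below builds the request and reads the Lighthouse performance score from the JSON response. It assumes the response layout lighthouseResult.categories.performance.score (a value from 0 to 1) and omits the API key that Google expects for regular or high-volume use; the helper names are hypothetical.

```python
import json
import urllib.request
from urllib.parse import urlencode

PSI_ENDPOINT = "https://www.googleapis.com/pagespeedonline/v5/runPagespeed"


def psi_request_url(page_url, strategy="mobile"):
    """Build a PageSpeed Insights API request for the mobile or desktop strategy."""
    if strategy not in ("mobile", "desktop"):
        raise ValueError("strategy must be 'mobile' or 'desktop'")
    return f"{PSI_ENDPOINT}?{urlencode({'url': page_url, 'strategy': strategy})}"


def performance_score(psi_response):
    """Convert the Lighthouse performance score (0-1 in the JSON) to 0-100."""
    score = psi_response["lighthouseResult"]["categories"]["performance"]["score"]
    return round(score * 100)


def fetch_scores(page_url, timeout=60.0):
    """Run the analysis for both strategies and return {"mobile": n, "desktop": n}."""
    scores = {}
    for strategy in ("mobile", "desktop"):
        with urllib.request.urlopen(psi_request_url(page_url, strategy), timeout=timeout) as resp:
            scores[strategy] = performance_score(json.load(resp))
    return scores
```

For example, fetch_scores("https://www.example.com/") puts the mobile and desktop scores side by side, which covers both versions of the site in one run.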

5. Check URL healthiness

There are a number of elements to check when assessing a URL’s ‘healthiness’. These include:

Page title

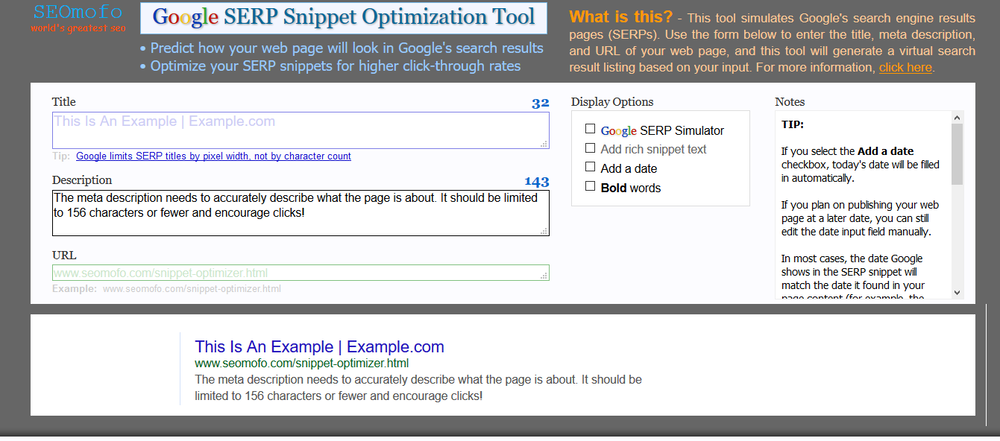

The page title (also referred to as the title tag) defines the title of the page and therefore it needs to accurately and concisely explain what the page is about.

The page title appears in the SERPs (see the meta description example below) as well as in the browser tab, and should be 70 characters or fewer in length.

It is used by search engines and web users alike to determine the theme of a page, so it’s important to ensure it is correctly optimised with the most important (and relevant) keyword(s).
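A quick way to audit titles in bulk is to extract each page’s title tag from its HTML and measure it. The following minimal Python sketch uses only the standard library’s html.parser and applies the 70-character limit suggested above. The class and function names are illustrative, and the HTML would come from however you download your pages.

```python
from html.parser import HTMLParser

MAX_TITLE_CHARS = 70  # limit suggested in this checklist


class TitleParser(HTMLParser):
    """Collect the text of the first <title> element."""

    def __init__(self):
        super().__init__()
        self.title = None
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        if tag == "title" and self.title is None:
            self._in_title = True
            self.title = ""

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def check_title(html):
    """Return a short verdict on the page title's presence and length."""
    parser = TitleParser()
    parser.feed(html)
    parser.close()
    # Collapse runs of whitespace, as browsers do when displaying titles.
    title = " ".join((parser.title or "").split())
    if not title:
        return "missing title"
    if len(title) > MAX_TITLE_CHARS:
        return f"too long ({len(title)} chars)"
    return f"ok ({len(title)} chars)"


print(check_title("<html><head><title>SEO Audit Checklist</title></head></html>"))
# ok (19 chars)
```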

Meta description

Meta descriptions don’t directly affect rankings, but they do affect whether or not someone is going to click on your SERP listing.

Therefore, it’s important that the meta description is uniquely written and accurately describes the page in question – rather than simply taking an excerpt of text from the page itself.

A snippet optimizer tool makes it easy to write meta descriptions of the correct length (156 characters or fewer). Note that the tool shown below also previews the page title.

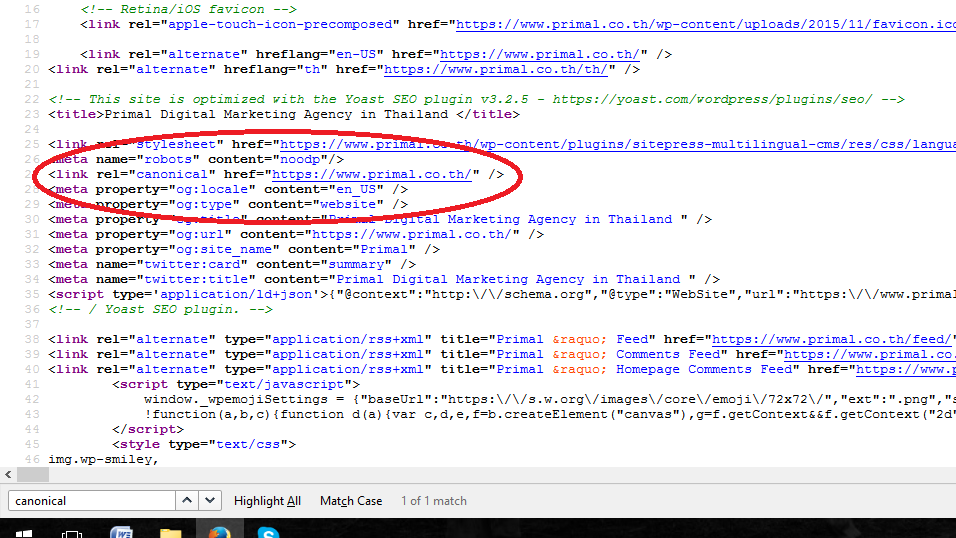
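The same approach works for meta descriptions. This Python sketch (standard library only, names illustrative) collects every meta description tag on a page and reports a missing, duplicated, empty or over-length description, using the 156-character limit above.

```python
from html.parser import HTMLParser

MAX_DESCRIPTION_CHARS = 156  # limit suggested in this checklist


class MetaDescriptionParser(HTMLParser):
    """Collect the content of every <meta name="description"> tag."""

    def __init__(self):
        super().__init__()
        self.descriptions = []

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "meta" and (attributes.get("name") or "").lower() == "description":
            self.descriptions.append((attributes.get("content") or "").strip())


def audit_meta_description(html):
    """Return a list of problems found; an empty list means none were found."""
    parser = MetaDescriptionParser()
    parser.feed(html)
    parser.close()
    if not parser.descriptions:
        return ["missing meta description"]
    issues = []
    if len(parser.descriptions) > 1:
        issues.append("multiple meta descriptions")
    for text in parser.descriptions:
        if not text:
            issues.append("empty meta description")
        elif len(text) > MAX_DESCRIPTION_CHARS:
            issues.append(f"too long ({len(text)} chars)")
    return issues
```

To check uniqueness as well, you could keep parser.descriptions for every page and look for text that repeats across pages.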

Canonicalization

It’s a long word, but it simply means ensuring that a website doesn’t serve multiple versions of the same page.

Canonicalization is important because otherwise the search engines don’t know which version of a page to show users.

Multiple versions of the same content also cause duplicate content issues, so it’s important to ensure canonicalization issues are addressed.

If there are multiple versions of one page, the webmaster will need to redirect these versions to a single, dominant version.

This can be done via a 301 redirect, or by utilizing the canonical tag.

The canonical tag is placed in the HTML head of a page. It marks the URL in question as a copy and names the preferred URL that search engines should index instead.

For example, within the HTML head of the page loading at https://thdev2.primal.website/index.php, there would be a tag like this:

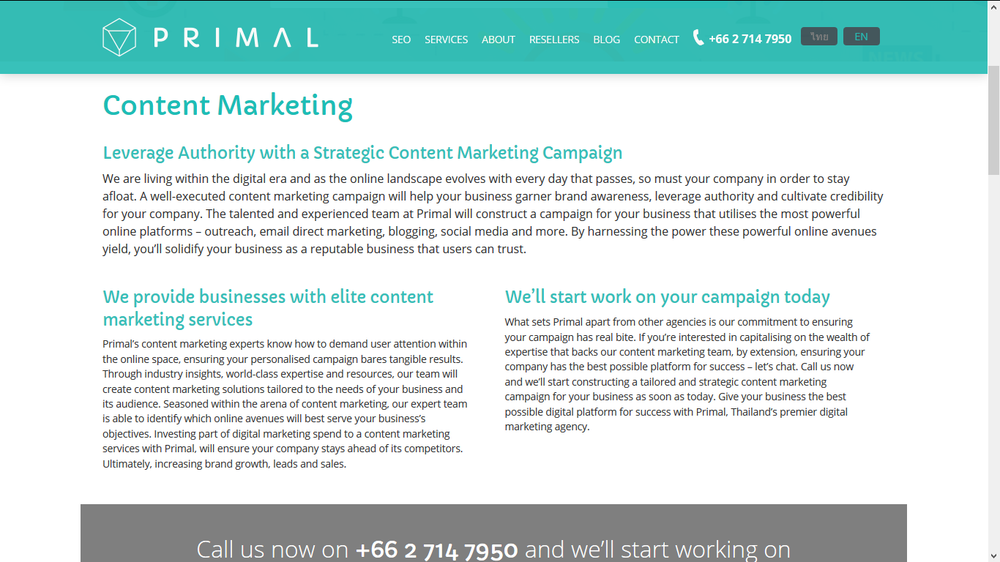
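To audit canonical tags across a site, you can extract the rel="canonical" link from each page and compare it with the URL you expected. This Python sketch (standard library only, names hypothetical) resolves relative href values against the page URL and returns None when a page has no canonical tag or more than one, since both cases deserve a closer look.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class CanonicalParser(HTMLParser):
    """Collect the href of every <link rel="canonical"> tag."""

    def __init__(self):
        super().__init__()
        self.canonicals = []

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        rel_values = (attributes.get("rel") or "").lower().split()
        if tag == "link" and "canonical" in rel_values and attributes.get("href"):
            self.canonicals.append(attributes["href"])


def canonical_url(page_url, html):
    """Return the absolute canonical URL, or None if it is missing or ambiguous."""
    parser = CanonicalParser()
    parser.feed(html)
    parser.close()
    if len(parser.canonicals) != 1:
        return None  # zero tags or conflicting tags both need attention
    return urljoin(page_url, parser.canonicals[0])
```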

Headings (H1, H2, etc…)

When performing an SEO site audit, check that page headings include relevant keywords without being over-optimised (i.e. the same keywords shouldn’t be repeated across multiple headings on the same page).

Make sure that headings are unique to each page so that no duplication issues arise.
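Duplicate headings across pages are easy to miss by eye. The minimal Python sketch below takes a dictionary of page URLs to HTML (how you fetch the pages is up to you; the URLs here are hypothetical), extracts every H1 to H6 heading, and reports headings that appear on more than one page, ignoring case and extra whitespace.

```python
from collections import defaultdict
from html.parser import HTMLParser

HEADING_TAGS = {"h1", "h2", "h3", "h4", "h5", "h6"}


class HeadingParser(HTMLParser):
    """Collect (tag, text) pairs for every heading, in document order."""

    def __init__(self):
        super().__init__()
        self.headings = []
        self._current = None
        self._buffer = []

    def handle_starttag(self, tag, attrs):
        if tag in HEADING_TAGS:
            self._current = tag
            self._buffer = []

    def handle_endtag(self, tag):
        if tag == self._current:
            text = " ".join("".join(self._buffer).split())
            if text:
                self.headings.append((tag, text))
            self._current = None

    def handle_data(self, data):
        # Text inside nested tags such as <span> or <a> is included.
        if self._current:
            self._buffer.append(data)


def duplicate_headings(pages):
    """Given {url: html}, return headings (compared case-insensitively) used on 2+ pages."""
    seen = defaultdict(set)
    for url, html in pages.items():
        parser = HeadingParser()
        parser.feed(html)
        parser.close()
        for _tag, text in parser.headings:
            seen[text.lower()].add(url)
    return {text: sorted(urls) for text, urls in seen.items() if len(urls) > 1}
```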

Index, noindex, follow, nofollow, etc…

These meta tags tell the search engines whether or not they should index a certain page or follow links that are placed on that page.

- Index tells the search engine to index a specific page

- Noindex tells the search engine not to index a specific page

- Follow tells the search engine to follow the links on a specific page

- Nofollow tells the search engine not to follow the links on a specific page
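The sketch below shows how these directives can be read from a page’s robots meta tag using Python’s standard html.parser. When no robots meta tag is present, search engines default to index and follow, and the "none" value is shorthand for noindex, nofollow. It only looks at meta name="robots" (not crawler-specific tags or HTTP headers), and the function names are illustrative.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Gather the comma-separated directives from <meta name="robots"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "meta" and (attributes.get("name") or "").lower() == "robots":
            content = attributes.get("content") or ""
            self.directives.update(d.strip().lower() for d in content.split(",") if d.strip())


def robots_directives(html):
    """Return whether the page may be indexed and its links followed."""
    parser = RobotsMetaParser()
    parser.feed(html)
    parser.close()
    d = parser.directives
    # Without a tag, the defaults are index and follow.
    return {
        "index": not ({"noindex", "none"} & d),
        "follow": not ({"nofollow", "none"} & d),
    }
```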

Response codes – 200, 301, 404 etc.

Check which HTTP status code the server returns when a web user or search engine bot requests each page.

It’s important to check the response codes of each page, as some codes can impact negatively on user experience and SEO.

- 200: Everything is okay.

- 301: Permanent redirect; everyone is redirected to the new location.

- 302: Temporary redirect; everyone is sent to the new location, but search engines may keep the original URL indexed because the move is not permanent.

- 404: Page not found; the requested page doesn’t exist (or no longer exists) and site visitors may see a 404 error page.

- 500: Internal server error; the server fails to return the page, so neither site visitors nor search engine bots can access it.

- 503: Service unavailable; a temporary alternative to a 404 that essentially asks everyone to ‘come back later’, for example during site maintenance.

Moz.com has some excellent information regarding response codes.
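Status codes can be checked page by page with a short script. This minimal Python sketch sends one HEAD request without following redirects, so a 301 or 302 is reported as such along with its Location header, and labels the result. The helper names are hypothetical; some servers answer HEAD differently from GET, so switch the method if results look odd.

```python
import http.client
from urllib.parse import urlsplit

# Short notes matching the response codes discussed above.
STATUS_NOTES = {
    200: "OK",
    301: "permanent redirect",
    302: "temporary redirect",
    404: "page not found",
    500: "server error",
    503: "service unavailable",
}
CLASS_NOTES = {2: "success", 3: "redirect", 4: "client error", 5: "server error"}


def fetch_status(url, timeout=10.0):
    """Send one HEAD request without following redirects; return (status, Location)."""
    parts = urlsplit(url)
    if parts.scheme == "https":
        conn = http.client.HTTPSConnection(parts.netloc, timeout=timeout)
    else:
        conn = http.client.HTTPConnection(parts.netloc, timeout=timeout)
    target = (parts.path or "/") + (f"?{parts.query}" if parts.query else "")
    try:
        conn.request("HEAD", target)
        response = conn.getresponse()
        return response.status, response.getheader("Location")
    finally:
        conn.close()


def describe(status):
    """Label a status code, falling back to its class (2xx, 3xx, ...) if unlisted."""
    note = STATUS_NOTES.get(status) or CLASS_NOTES.get(status // 100, "unknown")
    return f"{status} {note}"
```

For example, status, location = fetch_status("https://www.example.com/old-page") followed by describe(status) labels a hypothetical old URL, and location shows where a 301 or 302 points.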

Word counts/thin content

Ever since Google released its Panda algorithm update, thin content has become a real issue for webmasters around the globe.

Thin content can be defined as content that is both short on words and light on information; think short, generic pages that don’t provide any information of real value.

It’s important to make sure that the pages on a website provide visitors (and search engines) with in-depth, useful content.

In most cases, this means providing content that is longer – e.g. at least 300-500 words or more depending on the page (note that product pages can usually get away with fewer words).

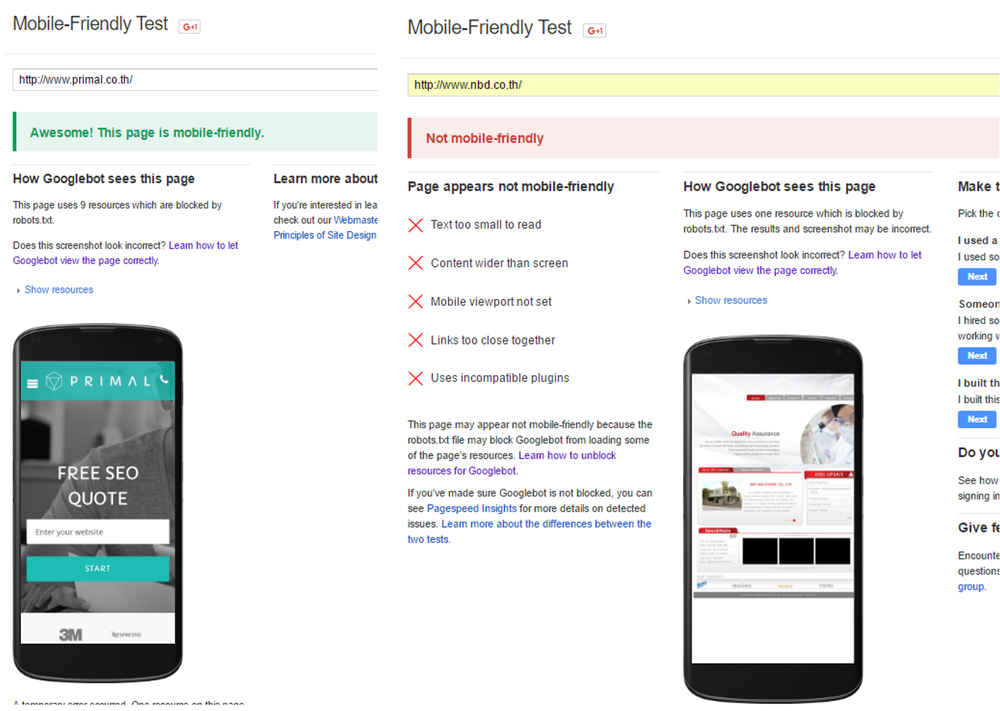
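To flag potentially thin pages, you can count the words a visitor would actually read. This Python sketch counts words in the page text while skipping script, style, noscript and title content, and applies the 300-word lower bound mentioned above. It still counts navigation and footer text, so treat the numbers as rough; the names are illustrative.

```python
from html.parser import HTMLParser

THIN_CONTENT_WORDS = 300  # lower bound suggested in this checklist


class VisibleTextParser(HTMLParser):
    """Collect words from text outside script, style, noscript and title elements."""

    SKIP = {"script", "style", "noscript", "title"}

    def __init__(self):
        super().__init__()
        self.words = []
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIP and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.words.extend(data.split())


def word_count(html):
    parser = VisibleTextParser()
    parser.feed(html)
    parser.close()
    return len(parser.words)


def is_thin(html, minimum=THIN_CONTENT_WORDS):
    return word_count(html) < minimum
```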

6. Check the site’s mobile friendliness

According to a comScore report released in mid-2014, internet access via mobile devices has overtaken internet access from desktops in the U.S. – and the rest of the world is heading in the same direction.

It’s also important to note that mobile friendliness is now one of Google’s ranking factors, thanks to the ‘Mobilegeddon’ algorithm update.

It’s therefore critical to ensure that a website is mobile friendly, which you can check with Google’s Mobile-Friendly Test.

This tool checks whether the design of your website works well on mobile devices.

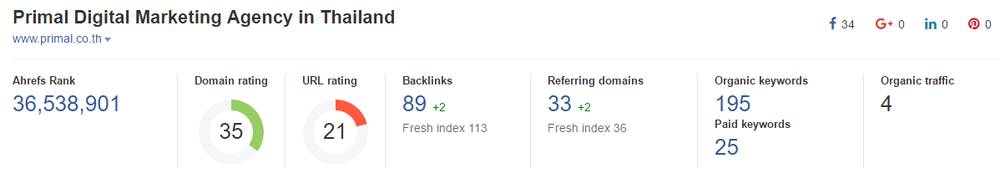
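One basic prerequisite for a mobile-friendly page is a viewport meta tag that tracks the device width. The Python sketch below checks only for that tag (it is not a substitute for a full mobile-friendliness test), and the names are illustrative.

```python
from html.parser import HTMLParser


class ViewportParser(HTMLParser):
    """Record the content of the <meta name="viewport"> tag, if any."""

    def __init__(self):
        super().__init__()
        self.viewport = None

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "meta" and (attributes.get("name") or "").lower() == "viewport":
            self.viewport = attributes.get("content") or ""


def has_responsive_viewport(html):
    """True if the page declares a viewport that tracks the device width."""
    parser = ViewportParser()
    parser.feed(html)
    parser.close()
    if parser.viewport is None:
        return False
    return "width=device-width" in parser.viewport.replace(" ", "").lower()
```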

7. Check backlink profiles

Backlinks are the links on other sites that point back to the website in question; they can be seen as ‘votes of confidence’ by other web users in favour of a website.

Generally, the more backlinks a website has, the better it will rank; however, there are some big exceptions to this rule.

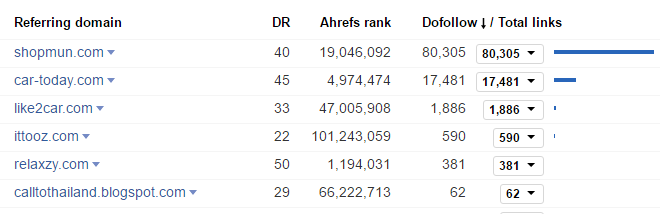

Large quantities of dofollow links coming from a single domain are frowned upon by Google, as are links coming from poor-quality or unrelated websites.

In other words, effective backlinks are about quality over quantity. Unnatural backlinks can lead to a website receiving a manual penalty or being de-indexed.

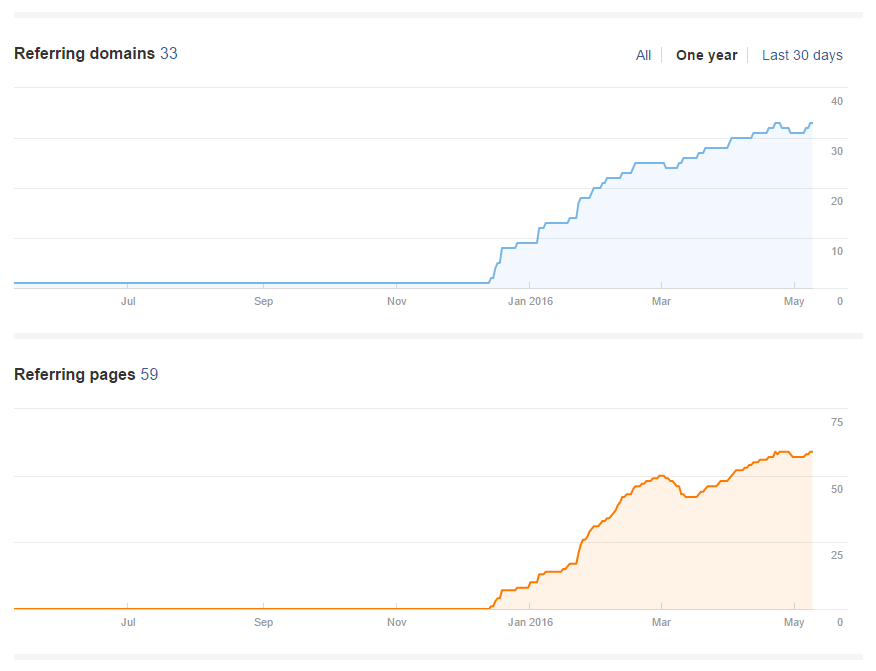
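Once you have exported the referring page URLs from a backlink tool into a list, counting links per referring domain is straightforward. This Python sketch groups links by hostname (dropping a leading "www.") and lists domains at or above a threshold; the threshold of 50 is an arbitrary placeholder, and the example URLs are hypothetical.

```python
from collections import Counter
from urllib.parse import urlsplit


def links_per_domain(source_urls):
    """Count backlinks by referring domain (hostname without a leading "www.")."""
    counts = Counter()
    for url in source_urls:
        host = (urlsplit(url).hostname or "").lower()
        if host.startswith("www."):
            host = host[4:]
        if host:
            counts[host] += 1
    return counts


def heavy_domains(source_urls, threshold=50):
    """Referring domains sending at least `threshold` links (threshold is arbitrary)."""
    return [d for d, n in links_per_domain(source_urls).most_common() if n >= threshold]
```

For example, heavy_domains(["https://www.spam.example/a", "https://spam.example/b", "https://blog.example.org/post"], threshold=2) returns ["spam.example"].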

You can check the backlink profile of a website via ahrefs.com.

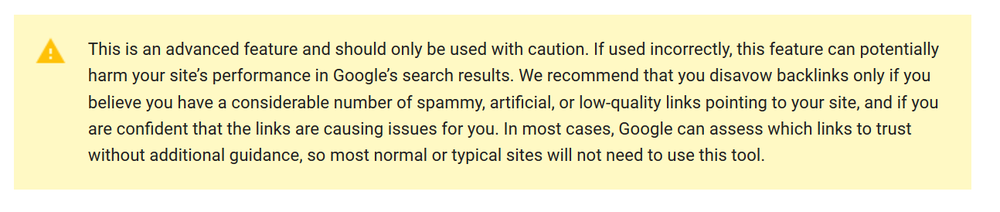

If Ahrefs is showing that there are spammy, low quality links pointing to your website (or large quantities of links pointing from one domain), then it may be necessary to perform a disavow.

Google Support offers a detailed guide that explains how to disavow unwanted backlinks. Performing a disavow is a two-step process: first download a list of all the links pointing to your website, then create a file listing the links that need to be disavowed and upload it to Google via the Disavow links tool in Search Console.
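Google’s disavow file is plain text with one entry per line: a full URL disavows a single page, a "domain:" prefix disavows a whole domain, and lines starting with # are comments. This Python sketch assembles that text from lists you have already chosen; which domains and URLs to include is your own judgement, and the entries below are hypothetical.

```python
def build_disavow_file(domains=(), urls=(), note=None):
    """Return disavow file text: optional comment, then domain: lines, then URLs."""
    lines = []
    if note:
        lines.append(f"# {note}")  # lines starting with # are comments
    lines.extend(f"domain:{d}" for d in sorted(set(domains)))  # disavow whole domains
    lines.extend(sorted(set(urls)))  # disavow individual pages
    return "\n".join(lines) + "\n"


contents = build_disavow_file(
    domains=["spam.example", "spam.example"],  # hypothetical spammy domain (deduplicated)
    urls=["https://low-quality.example/links-page"],  # hypothetical page
    note="Links flagged during the backlink audit",
)
print(contents)
```

You would then save the text as a .txt file encoded in UTF-8 or 7-bit ASCII and upload it with the Disavow links tool.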

Ready for optimization!

Once you have performed an in-depth SEO website analysis, areas in need of improvement come to light.

It is then a matter of rectifying these elements to ensure that the site is better placed to achieve a higher SERP ranking.

Once areas of concern are addressed, the site is essentially ‘up to date’ in terms of SEO; it’s now a matter of building upon this strong foundation to boost rankings and achieve better results! For SEO in Thailand, get in contact with us.
